Intraclass Correlation

Sample Size Determination

Confidence Interval Approach/1-Way Random Rater Effects

Input Data

Assume you are in the planning stage of an intra-rater reliability experiment and that the one-way random rater effects design will be adopted. However, you do not know how many raters are needed to obtain a confidence interval of the width you want.
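For reference, the one-way random rater effects model is commonly written as follows. This is a standard formulation assumed here, and AgreeStat360's own notation may differ. $R$ raters each produce $m$ ratings, $y_{jk}$ is the $k$-th rating by rater $j$, and the ICC $\rho$ is the correlation between two ratings produced by the same rater:

```latex
\begin{aligned}
y_{jk} &= \mu + r_j + e_{jk}, \quad j = 1,\dots,R,\; k = 1,\dots,m,\\
r_j &\sim N(0,\sigma_r^2), \quad e_{jk} \sim N(0,\sigma_e^2),\\
\rho &= \frac{\sigma_r^2}{\sigma_r^2 + \sigma_e^2}.
\end{aligned}
```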

AgreeStat360 can be used to determine the optimal number of raters, as well as the optimal number of ratings per rater, that will meet the prescribed confidence interval width. The input data needed to run this module is described in the figure below. For the software to make the most meaningful recommendations, you must provide the following:

- The desired confidence level (95% is the most widely used value).

- The desired confidence interval width. Specifying a range of values here allows AgreeStat360 to explore several possibilities around the interval width of interest.
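To illustrate how a confidence level and a target interval width translate into a number of raters, here is a minimal sketch using Bonett's (2002) large-sample formula for the one-way model. It assumes that raters play the role of the groups and ratings per rater the role of the replicates; AgreeStat360's own algorithm is not documented here and may differ. The function name `required_raters` is hypothetical.

```python
import math
from statistics import NormalDist


def required_raters(icc, width, ratings_per_rater, confidence=0.95):
    """Approximate number of raters R so that the ICC's confidence interval
    has (at most) the target width.

    Assumption: Bonett's (2002) large-sample formula for the one-way model,
    with raters treated as the groups and ratings per rater as replicates.
    """
    m = ratings_per_rater
    if m < 2:
        raise ValueError("need at least 2 ratings per rater")
    # Two-sided standard normal critical value, e.g. about 1.96 for 95%.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    num = 8 * z**2 * (1 - icc) ** 2 * (1 + (m - 1) * icc) ** 2
    den = m * (m - 1) * width**2
    # Round up: a fractional rater is not possible.
    return math.ceil(num / den + 1)


# Explore several widths around a target of 0.20 (hypothetical planning values).
for w in (0.15, 0.20, 0.25, 0.30):
    print(f"width={w:.2f}  raters needed={required_raters(0.7, w, 3)}")
```

Narrower intervals require more raters, and increasing the number of ratings per rater reduces the number of raters needed, which is the trade-off a power table lets you inspect.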

Analysis with AgreeStat360

To see how AgreeStat360 processes these inputs to produce its sample size recommendations, please play the video below. The video can also be watched on youtube.com if a larger view is needed.

Results

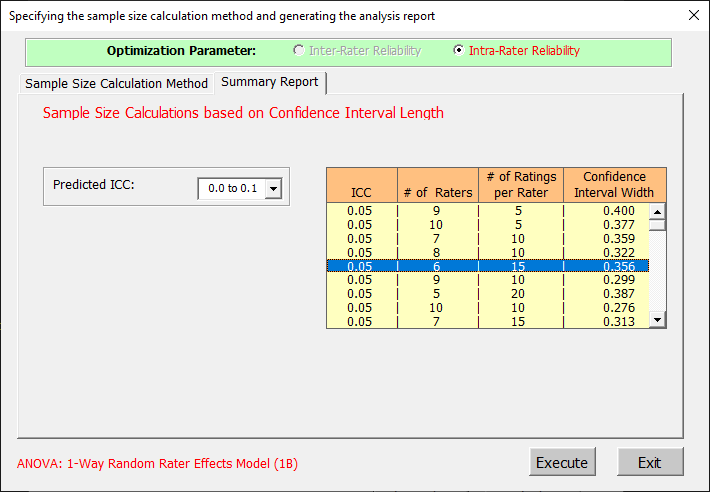

The output that AgreeStat360 produces is shown below. It contains a three-column "Power Table" on the right side, whose second and third columns show the number of raters and the number of ratings per rater associated with each confidence interval width.

- Note that the first column of the power table shows the predicted ICC.

- The range of values that the predicted ICC can take can be changed on the left side of the power table.
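The dependence of the interval width on the predicted ICC can be sketched by inverting the same large-sample approximation: for a fixed number of raters $R$ and ratings per rater $m$, the approximate width is $2z(1-\rho)(1+(m-1)\rho)\sqrt{2/(m(m-1)(R-1))}$. This is an assumption-based illustration (Fisher/Bonett variance for the one-way model, raters treated as groups), not AgreeStat360's documented computation, and `expected_width` is a hypothetical name.

```python
import math
from statistics import NormalDist


def expected_width(icc, n_raters, ratings_per_rater, confidence=0.95):
    """Approximate confidence interval width for the ICC given R raters and
    m ratings per rater.

    Assumption: large-sample normal approximation with the one-way model
    variance 2(1-rho)^2 (1+(m-1)rho)^2 / (m(m-1)(R-1)).
    """
    R, m = n_raters, ratings_per_rater
    if R < 2 or m < 2:
        raise ValueError("need at least 2 raters and 2 ratings per rater")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    se = (1 - icc) * (1 + (m - 1) * icc) * math.sqrt(2 / (m * (m - 1) * (R - 1)))
    # Full width of a symmetric interval: estimate +/- z * se.
    return 2 * z * se


# Vary the predicted ICC for one hypothetical design (20 raters, 3 ratings each).
for icc in (0.5, 0.6, 0.7, 0.8, 0.9):
    print(f"ICC={icc:.1f}  width={expected_width(icc, 20, 3):.3f}")
```

Under this approximation, higher predicted ICC values give narrower intervals for the same design, which is why the recommended number of raters depends on the range of predicted ICC values you choose.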